Ronan Bouroullec's sensitive use of concrete makes Brutalism look, well, brutish. His Ancora collection of tables seems impossibly light and airy.

"The collection of tables and low tables owes its name to the section of the base. The curved edge joins the structural element of the rib creating the shape of an anchor (in Italian 'ancora'), a design that balances aesthetics and function."

The tables are available as standalone units, suitable for both indoor and outdoor…

…and as bases for tables topped in either glass, concrete sheets or (boo) MDF with oak veneer.

The Ancora collection is in production by Magis.

A recent Reddit post asks “Amateur athletes of Reddit: what’s your ‘There’s levels to this shit’ experience from your sport?” Responses included:

We have some good runners who can win local races … And then you realise that if you put them in a 5000m race with Olympic-level athletes they’d get lapped at least 3 time and possibly 4 times.

Former NHL player putting in 10% effort was a harder, faster shot than mid-high tier beer league at 110%. The average adult player’s ceiling is buried somewhere deep under the worst NHL player’s basement floor.

And the thread includes more than one reference to Brian Scalabrine, who played in the NBA but was not a star. After he retired, he participated in a “Scallenge” where he played one-on-one against talented amateur players — and beat them by a combined score of 44-6. Explaining the gap in ability between the best amateurs and the worst professionals, he said “I’m way closer to LeBron James than you are to me.”

Brian Scalabrine is probably right, because professionals in many areas — not just athletics — really are on another level. And then there’s another level above that, and a level above that, too.

This phenomenon is surprising because it violates our intuition for the distribution of ability. We expect something like a bell curve — the Gaussian distribution — and what we get is a lognormal distribution with a tail that extends much, much farther.

This is the topic of Chapter 4 of Probably Overthinking It, where I show some examples and propose two explanations. To celebrate the imminent release of the paperback edition, here’s an excerpt (or, if you prefer video, I gave a talk based on this chapter).

Running Speeds

If you are a fan of the Atlanta Braves, a Major League Baseball team, or if you watch enough videos on the internet, you have probably seen one of the most popular forms of between-inning entertainment: a foot race between one of the fans and a spandex-suit-wearing mascot called the Freeze.

The route of the race is the dirt track that runs across the outfield, a distance of about 160 meters, which the Freeze runs in less than 20 seconds. To keep things interesting, the fan gets a head start of about 5 seconds. That might not seem like a lot, but if you watch one of these races, this lead seems insurmountable. However, when the Freeze starts running, you immediately see the difference between a pretty good runner and a very good runner. With few exceptions, the Freeze runs down the fan, overtakes them, and coasts to the finish line with seconds to spare.

But as fast as he is, the Freeze is not even a professional runner; he is a member of the Braves’ ground crew named Nigel Talton. In college, he ran 200 meters in 21.66 seconds, which is very good. But the 200 meter collegiate record is 20.1 seconds, set by Wallace Spearmon in 2005, and the current world record is 19.19 seconds, set by Usain Bolt in 2009.

To put all that in perspective, let’s start with me. For a middle-aged man, I am a decent runner. When I was 42 years old, I ran my best-ever 10 kilometer race in 42:44, which was faster than 94% of the other runners who showed up for a local 10K. Around that time, I could run 200 meters in about 30 seconds (with wind assistance).

But a good high school runner is faster than me. At a recent meet, the fastest girl at a nearby high school ran 200 meters in about 27 seconds, and the fastest boy ran under 24 seconds.

So, in terms of speed, a fast high school girl is 11% faster than me, a fast high school boy is 12% faster than her; Nigel Talton, in his prime, was 11% faster than him, Wallace Spearmon was about 8% faster than Talton, and Usain Bolt is about 5% faster than Spearmon.
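The percentages above can be checked with a few lines of arithmetic. This is a minimal sketch that uses only the 200 meter times quoted in the text; the helper name `percent_faster` is made up for illustration. Over a fixed distance, speed is inversely proportional to time, so the speed ratio is the slower time divided by the faster time.

```python
# 200 m times in seconds, as quoted in the text above.
times = [
    ("the author", 30.0),
    ("a fast high school girl", 27.0),
    ("a fast high school boy", 24.0),
    ("Nigel Talton", 21.66),
    ("Wallace Spearmon", 20.1),
    ("Usain Bolt", 19.19),
]


def percent_faster(slower_time, faster_time):
    """Percent increase in average speed over the same distance."""
    return (slower_time / faster_time - 1) * 100


# Compare each runner with the next faster one in the list.
steps = [
    (faster, slower, percent_faster(t_slow, t_fast))
    for (slower, t_slow), (faster, t_fast) in zip(times, times[1:])
]

for faster, slower, pct in steps:
    print(f"{faster} is {pct:.1f}% faster than {slower}")
```

Each step is a ratio rather than a fixed increment, which is the kind of spacing a lognormal distribution produces.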

Unless you are Usain Bolt, there is always someone faster than you, and not just a little bit faster; they are much faster. The reason, as you might suspect by now, is that the distribution of running speed is not Gaussian. It is more like lognormal.

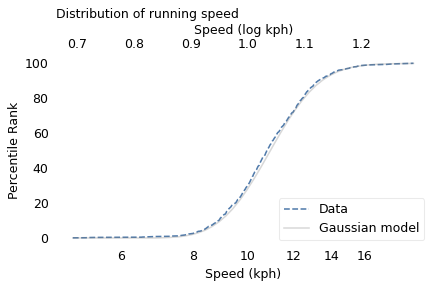

To demonstrate, I’ll use data from the James Joyce Ramble, which is the 10 kilometer race where I ran my previously-mentioned personal record time. I downloaded the times for the 1,592 finishers and converted them to speeds in kilometers per hour. The following figure shows the distribution of these speeds on a logarithmic scale, along with a Gaussian model I fit to the data.

The logarithms follow a Gaussian distribution, which means the speeds themselves are lognormal. You might wonder why. Well, I have a theory, based on the following assumptions:

- First, everyone has a maximum speed they are capable of running, assuming that they train effectively.

- Second, these speed limits can depend on many factors, including height and weight, fast- and slow-twitch muscle mass, cardiovascular conditioning, flexibility and elasticity, and probably more.

- Finally, the way these factors interact tends to be multiplicative; that is, each person’s speed limit depends on the product of multiple factors.

Here’s why I think speed depends on a product rather than a sum of factors. If all of your factors are good, you are fast; if any of them are bad, you are slow. Mathematically, the operation that has this property is multiplication.

For example, suppose there are only two factors, measured on a scale from 0 to 1, and each person’s speed limit is determined by their product. Let’s consider three hypothetical people:

- The first person scores high on both factors, let’s say 0.9. The product of these factors is 0.81, so they would be fast.

- The second person scores relatively low on both factors, let’s say 0.3. The product is 0.09, so they would be quite slow.

So far, this is not surprising: if you are good in every way, you are fast; if you are bad in every way, you are slow. But what if you are good in some ways and bad in others?

- The third person scores 0.9 on one factor and 0.3 on the other. The product is 0.27, so they are a little bit faster than someone who scores low on both factors, but much slower than someone who scores high on both.

That’s a property of multiplication: the product depends most strongly on the smallest factor. And as the number of factors increases, the effect becomes more dramatic.
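The three hypothetical people can be written out directly. This is a small sketch of the arithmetic only; the five-factor case at the end is an added illustration, not something taken from the race data.

```python
from math import prod

# Factor scores for the three hypothetical people described above.
people = {
    "high on both": [0.9, 0.9],
    "low on both": [0.3, 0.3],
    "mixed": [0.9, 0.3],
}

# Each person's speed limit is the product of their factors.
limits = {name: prod(factors) for name, factors in people.items()}
for name, limit in limits.items():
    print(f"{name}: {limit:.2f}")

# Illustrative extension: with five factors, one weak factor still dominates.
five_good = prod([0.9] * 5)
four_good_one_bad = prod([0.9] * 4 + [0.3])
print(f"five good factors: {five_good:.2f}")
print(f"four good, one bad: {four_good_one_bad:.2f}")
```

Replacing a single 0.9 with 0.3 cuts the product to a third, no matter how many other factors are strong.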

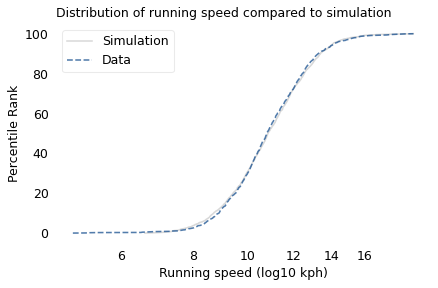

To simulate this mechanism, I generated five random factors from a Gaussian distribution and multiplied them together. I adjusted the mean and standard deviation of the Gaussians so that the resulting distribution fit the data; the following figure shows the results.

The simulation results fit the data well. So this example demonstrates a second mechanism that can produce lognormal distributions: the limiting power of the weakest link. If there are at least five factors that affect running speed, and each person’s limit depends on their worst factor, that would explain why the distribution of running speed is lognormal.
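Here is a rough sketch of that kind of simulation, using only the Python standard library. The mean and standard deviation of the factors (1.0 and 0.1), the sample size, and the seed are placeholder assumptions; they are not the values fitted to the race data, which the text does not give. The sketch compares the skewness of the simulated speeds with the skewness of their logarithms.

```python
import math
import random
import statistics


def simulate_speed_limits(n_people=10_000, n_factors=5, mu=1.0, sigma=0.1, seed=17):
    """Multiply n_factors Gaussian factors together for each simulated person.

    mu and sigma are assumed values, chosen so the factors stay positive.
    """
    rng = random.Random(seed)
    return [
        math.prod(rng.gauss(mu, sigma) for _ in range(n_factors))
        for _ in range(n_people)
    ]


def skewness(xs):
    """Sample skewness: the mean cubed z-score."""
    m = statistics.fmean(xs)
    s = statistics.pstdev(xs)
    return statistics.fmean(((x - m) / s) ** 3 for x in xs)


speeds = simulate_speed_limits()
log_speeds = [math.log(s) for s in speeds]

print(f"skewness of speeds:     {skewness(speeds):.3f}")
print(f"skewness of log speeds: {skewness(log_speeds):.3f}")
```

With these assumed parameters the products are skewed to the right, and taking logs brings the distribution closer to symmetric, which is what an approximately lognormal distribution looks like.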

I suspect that distributions of many other skills are also lognormal, for similar reasons. Unfortunately, most abilities are not as easy to measure as running speed, but some are. For example, chess-playing skill can be quantified using the Elo rating system, which we’ll explore in the next section.

Chess Rankings

In the Elo chess rating system, every player is assigned a score that reflects their ability. These scores are updated after every game. If you win, your score goes up; if you lose, it goes down. The size of the increase or decrease depends on your opponent’s score. If you beat a player with a higher score, your score might go up a lot; if you beat a player with a lower score, it might barely change. Most scores are in the range from 100 to about 3000, although in theory there is no lower or upper bound.

By themselves, the scores don’t mean very much; what matters is the difference in scores between two players, which can be used to compute the probability that one beats the other. For example, if the difference in scores is 400, we expect the higher-rated player to win about 90% of the time.
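The 90% figure follows from the standard Elo expected-score formula, sketched below. One caveat: the formula gives an expected score, which counts a draw as half a win, so reading it as a win probability is a simplification. The function name is chosen here for illustration.

```python
def elo_expected_score(rating_diff):
    """Expected score for a player rated rating_diff points above the opponent."""
    return 1 / (1 + 10 ** (-rating_diff / 400))


for diff in (0, 100, 200, 400):
    print(f"difference {diff:>3}: expected score {elo_expected_score(diff):.3f}")
```

A 400-point difference gives 10/11, or about 0.91, and a difference of zero gives exactly 0.5.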

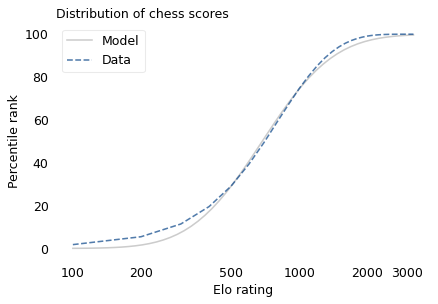

If the distribution of chess skill is lognormal, and if Elo scores quantify this skill, we expect the distribution of Elo scores to be lognormal. To find out, I collected data from Chess.com, which is a popular internet chess server that hosts individual games and tournaments for players from all over the world. Their leader board shows the distribution of Elo ratings for almost six million players who have used their service. The following figure shows the distribution of these scores on a log scale, along with a lognormal model.

The lognormal model does not fit the data particularly well. But that might be misleading, because unlike running speeds, Elo scores have no natural zero point. The conventional zero point was chosen arbitrarily, which means we can shift it up or down without changing what the scores mean relative to each other.

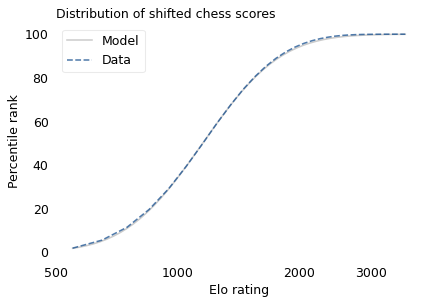

With that in mind, suppose we shift the entire scale so that the lowest point is 550 rather than 100. The following figure shows the distribution of these shifted scores on a log scale, along with a lognormal model.

With this adjustment, the lognormal model fits the data well.
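To see why the shift is harmless for Elo but matters for the fit, here is a small sketch with made-up ratings. Adding 450 moves the lowest point from 100 to 550 and leaves every rating difference unchanged, so the predicted outcomes are unchanged, but the spacing on a log scale changes, and that spacing is what the lognormal model responds to.

```python
import math


def shift_ratings(ratings, offset):
    """Move the arbitrary zero point of the scale by a constant offset."""
    return [r + offset for r in ratings]


ratings = [100, 500, 1000, 2000, 3000]  # hypothetical ratings for illustration
shifted = shift_ratings(ratings, 450)   # lowest point moves from 100 to 550

# Differences between neighbors are the same before and after the shift.
diffs = [b - a for a, b in zip(ratings, ratings[1:])]
shifted_diffs = [b - a for a, b in zip(shifted, shifted[1:])]

# Gaps on a log scale depend on ratios, so they do change.
log_gaps = [math.log10(b / a) for a, b in zip(ratings, ratings[1:])]
shifted_log_gaps = [math.log10(b / a) for a, b in zip(shifted, shifted[1:])]

print("differences unchanged:", diffs == shifted_diffs)
for before, after in zip(log_gaps, shifted_log_gaps):
    print(f"log10 gap before: {before:.3f}  after: {after:.3f}")
```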

Now, we’ve seen two explanations for lognormal distributions: proportional growth and weakest links. Which one determines the distribution of abilities like chess? I think both mechanisms are plausible.

As you get better at chess, you have opportunities to play against better opponents and learn from the experience. You also gain the ability to learn from others; books and articles that are inscrutable to beginners become invaluable to experts. As you understand more, you are able to learn faster, so the growth rate of your skill might be proportional to your current level.

At the same time, lifetime achievement in chess can be limited by many factors. Success requires some combination of natural abilities, opportunity, passion, and discipline. If you are good at all of them, you might become a world-class player. If you lack any of them, you will not. The way these factors interact is like multiplication, where the outcome is most strongly affected by the weakest link.

These mechanisms shape the distribution of ability in other fields, even the ones that are harder to measure, like musical ability. As you gain musical experience, you play with better musicians and work with better teachers. As in chess, you can benefit from more advanced resources. And, as in almost any endeavor, you learn how to learn.

At the same time, there are many factors that can limit musical achievement. One person might have a bad ear or poor dexterity. Another might find that they don’t love music enough, or they love something else more. One might not have the resources and opportunity to pursue music; another might lack the discipline and tenacity to stick with it. If you have the necessary aptitude, opportunity, and personal attributes, you could be a world-class musician; if you lack any of them, you probably can’t.

Outliers

If you have read Malcolm Gladwell’s book, Outliers, this conclusion might be disappointing. Based on examples and research on expert performance, Gladwell suggests that it takes 10,000 hours of effective practice to achieve world-class mastery in almost any field.

Referring to a study of violinists led by the psychologist K. Anders Ericsson, Gladwell writes:

“The striking thing […] is that he and his colleagues couldn’t find any ‘naturals,’ musicians who floated effortlessly to the top while practicing a fraction of the time their peers did. Nor could they find any ‘grinds,’ people who worked harder than everyone else, yet just didn’t have what it takes to break the top ranks.”

The key to success, Gladwell concludes, is many hours of practice. The source of the number 10,000 seems to be neurologist Daniel Levitin, quoted by Gladwell:

“In study after study, of composers, basketball players, fiction writers, ice skaters, concert pianists, chess players, master criminals, and what have you, this number comes up again and again. […] No one has yet found a case in which true world-class expertise was accomplished in less time.”

The core claim of the rule is that 10,000 hours of practice is necessary to achieve expertise. Of course, as Ericsson wrote in a commentary, “There is nothing magical about exactly 10,000 hours”. But it is probably true that no world-class musician has practiced substantially less.

However, some people have taken the rule to mean that 10,000 hours is sufficient to achieve expertise. In this interpretation, anyone can master any field; all they have to do is practice! Well, in running and many other athletic areas, that is obviously not true. And I doubt it is true in chess, music, or many other fields.

Natural talent is not enough to achieve world-level performance without practice, but that doesn’t mean it is irrelevant. For most people in most fields, natural attributes and circumstances impose an upper limit on performance.

In his commentary, Ericsson summarizes research showing the importance of “motivation and the original enjoyment of the activities in the domain and, even more important, […] inevitable differences in the capacity to engage in hard work (deliberate practice).” In other words, the thing that distinguishes a world-class violinist from everyone else is not 10,000 hours of practice, but the passion, opportunity, and discipline it takes to spend 10,000 hours doing anything.

The Greatest of All Time

Lognormal distributions of ability might explain an otherwise surprising phenomenon: in many fields of endeavor, there is one person widely regarded as the Greatest of All Time or the G.O.A.T.

For example, in hockey, Wayne Gretzky is the G.O.A.T. and it would be hard to find someone who knows hockey and disagrees. In basketball, it’s Michael Jordan; in women’s tennis, Serena Williams, and so on for most sports. Some cases are more controversial than others, but even when there are a few contenders for the title, there are only a few.

And more often than not, these top performers are not just a little better than the rest, they are a lot better. For example, in his career in the National Hockey League, Wayne Gretzky scored 2,857 points (the total of goals and assists). The player in second place scored 1,921. The magnitude of this difference is surprising, in part, because it is not what we would get from a Gaussian distribution.

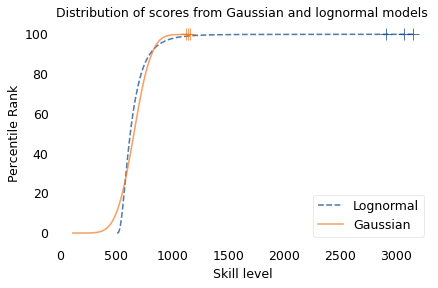

To demonstrate this point, I generated a random sample of 100,000 people from a lognormal distribution loosely based on chess ratings. Then I generated a sample from a Gaussian distribution with the same mean and variance. The following figure shows the results.

The mean and variance of these distributions are about the same, but the shapes are different: the Gaussian distribution extends a little farther to the left, and the lognormal distribution extends much farther to the right.

The crosses indicate the top three scorers in each sample. In the Gaussian distribution, the top three scores are 1123, 1146, and 1161. They are barely distinguishable in the figure, and if we think of them as Elo scores, there is not much difference between them. According to the Elo formula, we expect the top player to beat the #3 player about 55% of the time.

In the lognormal distribution, the top three scores are 2913, 3066, and 3155. They are clearly distinct in the figure and substantially different in practice. In this example, we expect the top player to beat #3 about 80% of the time.
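A sketch of this comparison, using only the standard library, is below. The lognormal parameters (a median of 800 and a log-scale standard deviation of 0.35) and the seed are placeholders chosen for illustration; the text says only that the sample was "loosely based on chess ratings", so the numbers printed here will not match the figure.

```python
import math
import random
import statistics


def elo_expected_score(rating_diff):
    """Expected score for a player rated rating_diff points above the opponent."""
    return 1 / (1 + 10 ** (-rating_diff / 400))


rng = random.Random(3)
n = 100_000
mu_log, sigma_log = math.log(800), 0.35  # assumed parameters, not from the text

# Lognormal sample, then a Gaussian sample with the same mean and variance.
lognormal = [rng.lognormvariate(mu_log, sigma_log) for _ in range(n)]
mean = statistics.fmean(lognormal)
std = statistics.pstdev(lognormal)
gaussian = [rng.gauss(mean, std) for _ in range(n)]


def top_three(xs):
    """Return the three largest values in ascending order."""
    return sorted(xs)[-3:]


for name, sample in [("Gaussian", gaussian), ("lognormal", lognormal)]:
    third, second, first = top_three(sample)
    p = elo_expected_score(first - third)
    print(f"{name}: top three {third:.0f}, {second:.0f}, {first:.0f}; "
          f"#1 expected score against #3: {p:.2f}")
```

Applied to the scores quoted above, the same formula gives about 0.55 for a 38-point gap (1161 versus 1123) and about 0.80 for a 242-point gap (3155 versus 2913).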

In reality, the top-rated chess players in the world are more tightly clustered than my simulated players, so this example is not entirely realistic. Even so, Garry Kasparov is widely considered to be the greatest chess player of all time. The current world champion, Magnus Carlsen, might overtake him in another decade, but even he acknowledges that he is not there yet.

Less well known, but more dominant, is Marion Tinsley, who was the checkers (aka draughts) world champion from 1955 to 1958, withdrew from competition for almost 20 years – partly for lack of competition – and then reigned uninterrupted from 1975 to 1991. Between 1950 and his death in 1995, he lost only seven games, two of them to a computer. The man who programmed the computer thought Tinsley was “an aberration of nature”.

Marion Tinsley might have been the greatest G.O.A.T. of all time, but I’m not sure that makes him an aberration. Rather, he is an example of the natural behavior of lognormal distributions:

- In a lognormal distribution, the outliers are farther from average than in a Gaussian distribution, which is why ordinary runners can’t beat the Freeze, even with a head start.

- And the margin between the top performer and the runner-up is wider than it would be in a Gaussian distribution, which is why the greatest of all time is, in many fields, an outlier among outliers.

The post It’s Levels appeared first on Probably Overthinking It.

Abstract: Transformer components such as non-linear activations and normalization are inherently non-injective, suggesting that different inputs could map to the same output and prevent exact recovery of the input from a model's representations. In this paper, we challenge this view. First, we prove mathematically that transformer language models mapping discrete input sequences to their corresponding sequence of continuous representations are injective and therefore lossless, a property established at initialization and preserved during training. Second, we confirm this result empirically through billions of collision tests on six state-of-the-art language models, and observe no collisions. Third, we operationalize injectivity: we introduce SipIt, the first algorithm that provably and efficiently reconstructs the exact input text from hidden activations, establishing linear-time guarantees and demonstrating exact invertibility in practice. Overall, our work establishes injectivity as a fundamental and exploitable property of language models, with direct implications for transparency, interpretability, and safe deployment.

If I mention nuclear reactor accidents, you’d probably think of Three Mile Island, Fukushima, or maybe Chernobyl (or, now, Chornobyl). But there have been others that, for whatever reason, aren’t as well publicized. Did you know there is an International Nuclear Event Scale? Like the Richter scale, but for nuclear events. A zero on the scale is a little oopsie. A seven is like Chernobyl or Fukushima, the only two such events at that scale so far. Three Mile Island and the event you’ll read about in this post were both level five events. That other level five event? The Windscale fire incident in October of 1957.

If you imagine this might have something to do with the Cold War, you are correct. It all started back in the 1940s. The British decided they needed a nuclear bomb project and started their version of the Manhattan Project called “Tube Alloys.” But in 1943, they decided to merge the project with the American program.

The British, rightfully so, saw themselves as co-creators of the first two atomic bombs. However, in post-World War paranoia, the United States shut down all cooperation on atomic secrets with the 1946 McMahon Act.

We Are Not Amused

The British were not amused and knew that to secure a future seat at the world table, they would need to develop their own nuclear capability, so they resurrected Tube Alloys. If you want a detour about the history of Britain’s bomb program, the BBC has a video for you that you can see below.

Of course, post-war Britain wasn’t exactly flush with cash, so they had to limit their scope a bit. While the Americans had built bombs with both uranium and plutonium, the UK decided to focus on plutonium, which could create a stronger bomb with less material.

Of course, that also means you have to create plutonium, so they built two reactors — or piles, as they were known then. They were both in the same location near Seascale, Cumberland.

Inside a Pile

The reactors were pretty simple. There was a big block of graphite with channels drilled through it horizontally. You inserted uranium fuel cartridges in one end, pushing the previous cartridge through the block until they fell out the other side into a pool of water.

The cartridges were encased in aluminum and had cooling fins. These things got hot! Immediately, though, practical concerns — that is, budgets — got in the way. Water cooling was a good idea, but there were problems. First, you needed ultra-pure water. Next, you needed to be close to the sea to dump radioactive cooling water, but not too close to any people. Finally, you had to be willing to lose a circle around the site about 60 miles in diameter if the worst happened.

The US facility at Hanford, indeed, had a 30-mile escape road for use if they had to abandon the site. They dumped water into the Columbia River, which, of course, turned out to be a bad idea. The US didn’t mind spending on pure water.

Since the British didn’t like any of those constraints, they decided to go with air cooling using fans and 400-foot-tall chimneys.

Our Heroes

Most of us can relate to being on a project where the rush to save money causes problems. A physicist, Terence Price, wondered what would happen if a fuel cartridge split open. For example, one might miss the water pool on the other side of the reactor. There would be a fire and uranium oxide dust blowing out the chimney.

The idea of filters in each chimney was quickly shut down. Since the stacks were almost complete, they’d have to go up top, costing money and causing delays. However, Sir John Cockcroft, in charge of the construction, decided he’d install the filters anyway. The filters became known as Cockcroft’s Follies because they were deemed unnecessary.

So why are these guys the heroes of this story? It isn’t hard to guess.

A Rush to Disaster

The government wanted to quickly produce a bomb before treaties would prohibit them from doing so. That put them on a rush to get H-bombs built by 1958. There was no time to build more reactors, so they decided to add material, including magnesium, to the fuel cartridges to produce tritium. The engineers were concerned about flammability, but no one wanted to hear it.

They also decided to make the fins of the cartridges smaller to raise the temperature, which was good for production. This also allowed them to stuff more fuel inside. Engineers again complained. Hotter, more flammable fuel. What could go wrong? When no one would listen, the director, Christopher Hinton, resigned.

The Inevitable

The change in how heat spread through the core was dangerous. But the sensors in place were set for the original patterns, so the increased heat went undetected. Everything seemed fine.

It was known that graphite tends to store some energy from neutron bombardment for later release, which could be catastrophic. The solution was to heat the core to a point where the graphite started to get soft, which would gradually release the potential energy. This was a regular part of operating the reactors. The temperature would spike and then subside. Operations would then proceed as usual.

By 1957, they’d done eight of these release cycles and prepared for a ninth. However, this one didn’t go as planned. Usually, the core would heat evenly. This time, one channel got hot and the rest didn’t. They decided to try the release again. This time it seemed to work.

As the core started to cool as expected, there was an anomaly. The temperature in one part of the core was rising instead, reaching up to 400C. They sped up the fans and the radiation monitors determined that they had a leak up the chimney.

Memories

Remember the filters? Cockcroft’s Follies? Well, radioactive dust had gone up the chimney before. In fact, it had happened pretty often. As predicted, the fuel would miss the pool and burst.

With the one spot getting hotter, operators assumed a cartridge had split open in the core. They were wrong. The cartridge was on fire. The Windscale reactor was on fire.

Of course, speeding up the fans just made the fire worse. Two men donned protective gear and went to peek at an inspection port near the hot spot. They saw four channels of fuel glowing “bright cherry red”. At that point, the reactor had been on fire for two days. The Reactor Manager suited up and climbed the 80 feet to the top of the reactor building so he could assess the backside of the unit. It was glowing red also.

Fight Fire with ???

The fans only made the fire worse. They tried to push the burning cartridges out with metal poles. They came back melted and radioactive. The reactor was now white hot. They then tried about 25 tonnes of carbon dioxide, but getting it to where it was needed proved to be too difficult, so that effort was ineffective.

By the 11th of October, an estimated 11 tonnes of uranium were burning, along with magnesium in the fuel for tritium production. One thermocouple was reading 3,100C, although that almost had to be a malfunction. Still, it was plenty hot. There was fear that the concrete containment building would collapse from the heat.

You might think water was the answer, and it could have been. But when water hits molten metal, hydrogen gas results, which, of course, is going to explode under those conditions. They decided, though, that they had to try. The manager once again took to the roof and tried to listen for any indication that hydrogen was building up. A dozen firehoses pushed into the core didn’t make any difference.

Sci Fi

If you read science fiction, you probably can guess what did work. Starve the fire for air. The manager, a man named Tuohy, and the fire chief remained and sent everyone else out. If this didn’t work, they were going to have to evacuate the nearby town anyway.

They shut off all cooling and ventilation to the reactor. It worked. The temperature finally started going down, and the firehoses were now having an effect. It took 24 hours of water flow to get things completely cool, and the water discharge was, of course, radioactive.

If you want a historical documentary on the event, here’s one from Spark:

Aftermath

The government kept a tight lid on the incident and underreported what had been released. But much less radioactive iodine, cesium, plutonium, and polonium was released because of the chimney filters. Cockcroft’s Folly had paid off.

While it wasn’t ideal, official estimates are that 240 extra cancer cases were due to the accident. Unofficial estimates are higher, but still comparatively modest. Also, there had been hushed-up releases earlier, so it is probable that the true number due to this one accident is even lower, although if it is your cancer, you probably don’t care much which accident caused it.

Milk from the area was dumped into the sea for a while. Today, the reactor is sealed up, and the site is called Sellafield. It still contains thousands of damaged fuel elements within. The site is largely stable, although the costs of remediating the area have been, and will continue to be, staggering.

This isn’t the first nuclear slip-up that could have been avoided by listening to smart people earlier. We’ve talked before about how people tend to overestimate or sensationalize these kinds of disasters. But it still is, of course, something you want to avoid.

Featured image: “HD.15.003” by United States Department of Energy

At Olin College recently, I met with a group from the Kyiv School of Economics who are creating a new engineering program. I am very impressed with the work they are doing, and their persistence despite everything happening in Ukraine.

As preparation for their curriculum design process, they interviewed engineers and engineering students, and they identified two recurring themes: passion and disappointment — that is, passion for engineering and disappointment with the education they got.

One of the professors, reflecting on her work experience, said she thought her education had given her a good theoretical foundation, but when she went to work, she found that it did not apply — she felt like she was starting from scratch.

I suggested that if a “good theoretical foundation” is not actually good preparation for engineering work, maybe it’s not actually a foundation — maybe it’s just a hoop for the ones who can jump through it, and a barrier for the ones who can’t.

The engineering curriculum is based on the assumption that math (especially calculus) and science (especially physics) are (1) the foundations of engineering, and therefore (2) the prerequisites of engineering education. Together, these assumptions are what I call the Foundation Fallacy.

To explain what I mean, I’ll use an example that is not exactly engineering, but it demonstrates the fallacy and some of the rhetoric that sometimes obscures it.

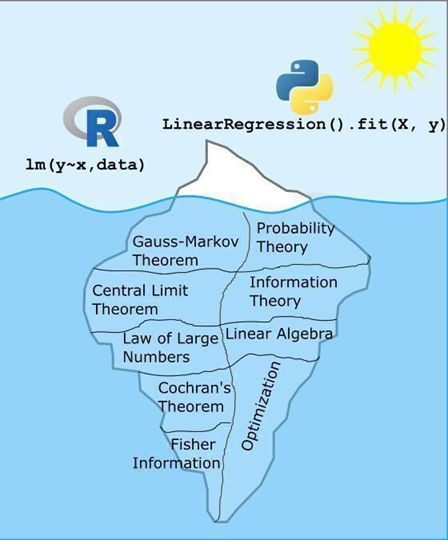

A recent post on LinkedIn includes this image:

And this text:

What makes a data scientist a data scientist? Is it their ability to use R or Python to solve data problems? Partially. But just like any tool, I’d rather those making decisions with data truly understand the tools they’re using so that when something breaks, they can diagnose it.

As the image shows, running a linear regression in R or Python is just the tip of the iceberg. What lies beneath, including the theory, assumptions, and reasoning that make those models work, is far more substantial and complex.

ChatGPT can write the code. But it’s the data scientist who decides whether that model is appropriate, interprets the results, and translates them into sound decisions. That’s why I don’t just hand my students an R function and tell them to use it. We dig into why it works, not just that it works. The questions and groans I get along the way are all part of the process, because this deeper understanding is what truly sets a data scientist apart.

Most of the replies to this post, coming from people who jumped through the hoops, agree. The ones who hit a barrier, and the ones groaning in statistics classes, might have a different opinion.

I completely agree that choosing models, interpreting results, and making sound decisions are as important as programming skills. But I’m not sure the things in that iceberg actually develop those skills — in fact, I am confident they don’t.

And maybe for someone who knows these topics, “when something breaks, they can diagnose it.” But I’m not sure about that either — and I am quite sure it’s not necessary. You can understand multicollinearity without a semester of linear algebra. And you can get what you need to know about AIC without a semester of information theory.

For someone building a regression model, a high-level understanding of causal inference is a lot more useful than the Gauss-Markov theorem. Also more useful: domain knowledge, understanding the context, and communicating the results. Maybe math and science classes could teach these topics, but the ones in this universe really, really don’t.

Everything I just said about linear regression also applies to engineering. Good engineers understand context, not just technology; they understand the people who will interact with, and be affected by, the things they build; and they can communicate effectively with non-engineers.

In their work lives, engineers hardly ever use calculus — more often they use computational tools based on numerical methods. If they know calculus, does that knowledge help them use the tools more effectively, or diagnose problems? Maybe, but I really doubt it.

My reply to the iceberg analogy is the car analogy: you can drive a car without knowing how the engine works. And knowing how the engine works does not make you a better driver. If someone is passionate about driving, the worst thing we can do is make them study thermodynamics. The best thing we can do is let them drive.

The post The Foundation Fallacy appeared first on Probably Overthinking It.